Systematic Approaches to Model Evaluation

We need systematic ways to accomplish several key tasks in model evaluation:

- Assess Standard Error

- Set Hyperparameters

- Choose Variables/Features to Include

- Try Less or More "Complex" Models

- Choose Between Different Learning Algorithms

Goal and Context

Our primary objective is to achieve both:

- High R2R^2 (coefficient of determination)

- Strong generalization capability (which enables good performance on new data)

This is particularly crucial when we don't have applicable formulas to guide us.

Core Question

How do we assess predictor performance based purely on data, without relying on formulas?

Validation Process

The validation process follows these steps:

- Data Division: We (randomly) divide our data into two parts:

- Training set

- Validation (hold-out) set

- Model Development: We perform regression on the training set to fit a model using a chosen algorithm.

- Error Assessment:

- Use the validation set to measure prediction errors

- Compare different predictors and algorithms

- Repeat this process systematically for each candidate predictor and algorithm

Model Selection Strategy

- Choose the model structure that performs best on the validation set

- Important consideration: Be aware that the model might have gotten "lucky" and be overfitting the data set

- Final evaluation should be performed on a third data set (test set)

Hyperparameters

Hyperparameters serve two crucial functions:

- They are clearly specified parameters that control the training process

- They are required for model optimization

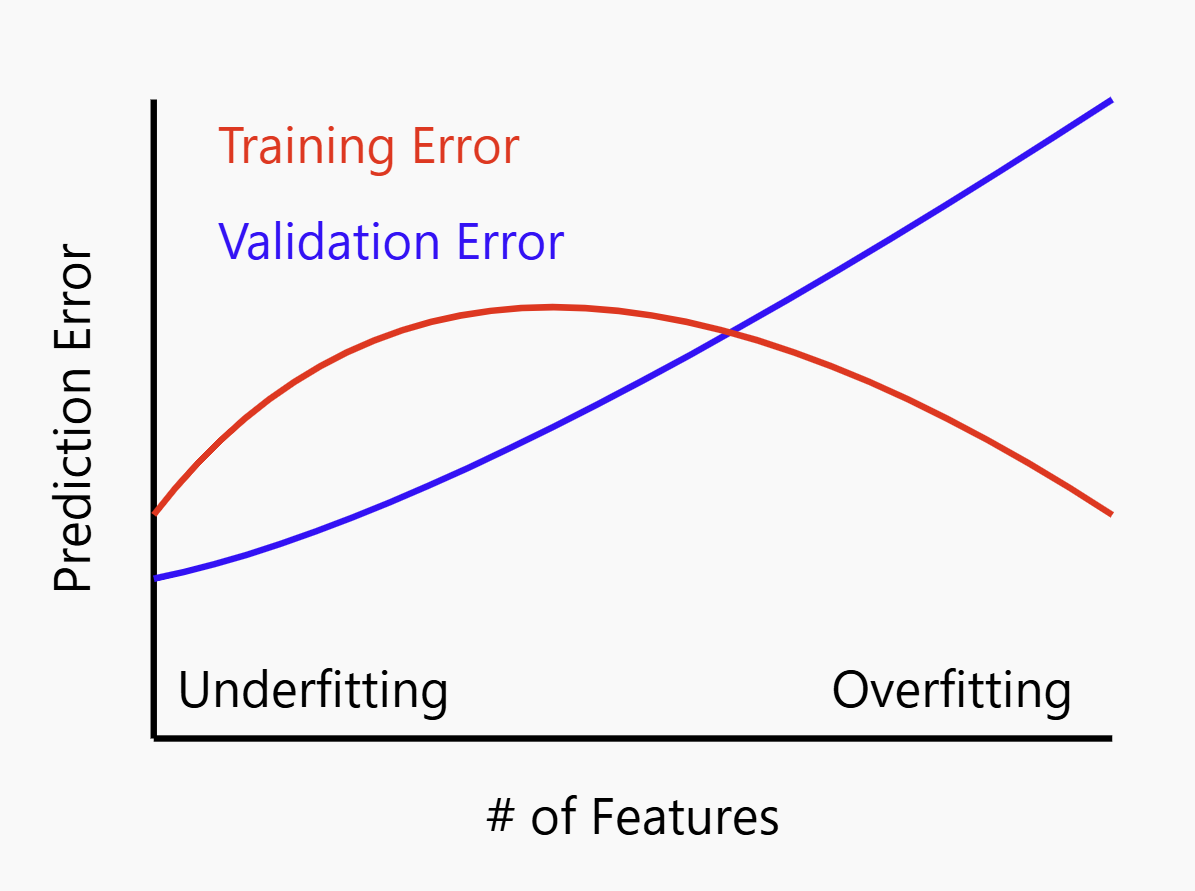

Visual Representation

Let me create a visualization of the relationship between prediction error and number of features:

Comments NOTHING